Emotional Speech-Driven Animation with Content-Emotion Disentanglement

2023

Conference Paper

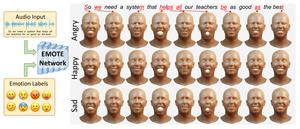

To be widely adopted, 3D facial avatars must be animated easily, realistically, and directly from speech signals. While the best recent methods generate 3D animations that are synchronized with the input audio, they largely ignore the impact of emotions on facial expressions. Realistic facial animation requires lip-sync together with the natural expression of emotion. To that end, we propose EMOTE (Expressive Model Optimized for Talking with Emotion), which generates 3D talking-head avatars that maintain lip-sync from speech while enabling explicit control over the expression of emotion. To achieve this, we supervise EMOTE with decoupled losses for speech (i.e., lip-sync) and emotion. These losses are based on two key observations: (1) deformations of the face due to speech are spatially localized around the mouth and have high temporal frequency, whereas (2) facial expressions may deform the whole face and occur over longer intervals. Thus, we train EMOTE with a per-frame lip-reading loss to preserve the speech-dependent content, while supervising emotion at the sequence level. Furthermore, we employ a content-emotion exchange mechanism in order to supervise different emotions on the same audio, while maintaining the lip motion synchronized with the speech. To employ deep perceptual losses without getting undesirable artifacts, we devise a motion prior in the form of a temporal VAE. Due to the absence of high-quality aligned emotional 3D face datasets with speech, EMOTE is trained with 3D pseudo-ground-truth extracted from an emotional video dataset (i.e., MEAD). Extensive qualitative and perceptual evaluations demonstrate that EMOTE produces speech-driven facial animations with better lip-sync than state-of-the-art methods trained on the same data, while offering additional, high-quality emotional control.
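The decoupled supervision described above can be illustrated with a toy sketch. It assumes that per-frame lip-reading features and per-frame emotion features have already been extracted for both the predicted animation and the pseudo-ground-truth. The function names, the feature shapes, the cosine distance, and the mean pooling over time are all illustrative assumptions, not EMOTE's actual implementation.

```python
import numpy as np

def cosine_distance(a, b, eps=1e-8):
    """Return 1 - cosine similarity, computed along the last axis."""
    num = np.sum(a * b, axis=-1)
    den = np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1) + eps
    return 1.0 - num / den

def lip_loss_per_frame(pred_lip, target_lip):
    """Hypothetical per-frame content loss.

    pred_lip, target_lip: arrays of shape (T, D), one feature vector per frame.
    Every frame is compared with its counterpart, so timing errors are penalized.
    """
    return float(np.mean(cosine_distance(pred_lip, target_lip)))

def emotion_loss_sequence(pred_emo, target_emo):
    """Hypothetical sequence-level emotion loss.

    Features are pooled over time first (assumed: simple mean), so only one
    vector per sequence is compared and frame-level timing does not matter.
    """
    return float(cosine_distance(pred_emo.mean(axis=0), target_emo.mean(axis=0)))

def total_loss(pred_lip, target_lip, pred_emo, target_emo, w_lip=1.0, w_emo=1.0):
    """Weighted sum of the two decoupled terms (weights are placeholders)."""
    return (w_lip * lip_loss_per_frame(pred_lip, target_lip)
            + w_emo * emotion_loss_sequence(pred_emo, target_emo))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(50, 16))        # 50 frames, 16-dim toy features
    shifted = np.roll(feats, 1, axis=0)      # same frames, shifted by one step
    print("lip loss, shifted frames:    ", lip_loss_per_frame(shifted, feats))
    print("emotion loss, shifted frames:", emotion_loss_sequence(shifted, feats))
```

Shifting the frames in time leaves the pooled emotion term essentially unchanged while the per-frame term grows, which mirrors the abstract's distinction between high-frequency, localized speech content and emotion that unfolds over longer intervals.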

| Field | Value |
|---|---|
| Author(s): | Radek Daněček and Kiran Chhatre and Shashank Tripathi and Yandong Wen and Michael Black and Timo Bolkart |
| Year: | 2023 |
| Month: | December |
| Publisher: | ACM |
| Department(s): | Perceiving Systems |
| Bibtex Type: | Conference Paper (inproceedings) |
| Paper Type: | Conference |
| DOI: | 10.1145/3610548.3618183 |
| Event Name: | SIGGRAPH Asia |
| Event Place: | Sydney, NSW, Australia |
| ISBN: | 979-8-4007-0315-7/23/12 |
| State: | Accepted |
| URL: | https://emote.is.tue.mpg.de/index.html |
| Links: | arXiv |

BibTeX

@inproceedings{EMOTE,
  title = {Emotional Speech-Driven Animation with Content-Emotion Disentanglement},
  author = {Daněček, Radek and Chhatre, Kiran and Tripathi, Shashank and Wen, Yandong and Black, Michael and Bolkart, Timo},
  publisher = {ACM},
  month = dec,
  year = {2023},
  doi = {10.1145/3610548.3618183},
  url = {https://emote.is.tue.mpg.de/index.html},
  month_numeric = {12}
}